So I decided to pick my game development hobby back up after a break of about 15 years. Video games are actually why I started programming. In high school I wanted to make games, and during summer break I’d stay up until morning working on them every night. I’ve still got that old code, well, most of it. It’s horribly out of date, though, so I’ve started rewriting some of my old shared code to target modern APIs. To kick things off I started with something simple: a brick breaker clone. I could have used an existing engine and finished in a fraction of the time, but I’ve always been fascinated by engine development, so I wrote it from scratch. Here are a few things I learned along the way.

X11 Fullscreen

To go fullscreen, I was creating a window that covered the screen with override redirect set. This works, but it’s the “old” way of doing it, and I also wanted to toggle fullscreen on the fly. It’s feasible to toggle override redirect and move the window around to cover the screen; this is basically how I’m doing it on Windows. But there’s a better way. The _NET_WM_STATE property holds a list of window states. Setting that list to the _NET_WM_STATE_FULLSCREEN atom with XChangeProperty makes the window fullscreen, and removing the property with XDeleteProperty returns it to normal. What’s nice about this approach is that the window manager is involved instead of bypassed. It picks the right monitor for you and automatically restores the old window position and size when you exit fullscreen.

GTK+ Fullscreen

GTK+ conveniently has a fullscreen mode, which probably uses _NET_WM_STATE under the hood. However, it won’t make the window fullscreen if the window isn’t resizable. That makes sense, but I want my window to have a fixed size until it’s fullscreened so I can control the aspect ratio. Simple fix: just call gtk_window_set_resizable with TRUE before calling gtk_window_fullscreen, right? Yes and no. The problem is that the resizable change doesn’t take effect immediately, so you have to wait for it to sync with the window manager. My solution was to set resizable, request fullscreen, and then pump the message queue in a loop until the window actually becomes fullscreen. I’d rather use a function that syncs changes with the window manager, but I haven’t found one yet. One catch is that the window manager is allowed to ignore the fullscreen request, so I only try for one second before assuming the request was denied. I could possibly wait for the resizable property to change instead of the fullscreen state, but I’m concerned that the property will change before it’s synced with the window manager.
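The pump-until-fullscreen-or-give-up loop described above can be sketched in plain C. This is not GTK code. `wait_until`, `now_seconds`, and the `fake_wm` stand-in are hypothetical names. The fake window manager simply "applies" the request after a set number of pumps, so the loop can be exercised without a display. In a real GTK 3 program, the pump would presumably run something like `g_main_context_iteration(NULL, FALSE)`. The check would test the window's fullscreen state, for example via `gdk_window_get_state`.

```c
#define _POSIX_C_SOURCE 199309L
#include <stdbool.h>
#include <time.h>

/* Seconds from a monotonic clock, so wall-clock changes can't skew the timeout. */
static double now_seconds(void) {
    struct timespec ts;
    clock_gettime(CLOCK_MONOTONIC, &ts);
    return (double)ts.tv_sec + (double)ts.tv_nsec / 1e9;
}

/* Call pump(ctx) until done(ctx) is true or `timeout` seconds have passed.
   Returns true if the condition was met, false if we gave up. */
static bool wait_until(bool (*done)(void *), void (*pump)(void *),
                       void *ctx, double timeout) {
    double deadline = now_seconds() + timeout;
    while (!done(ctx)) {
        if (now_seconds() >= deadline)
            return false;           /* assume the window manager said no */
        pump(ctx);                  /* e.g. process pending GUI events */
    }
    return true;
}

/* Hypothetical stand-in for the window manager: the request "takes effect"
   after pumps_left pumps; a negative count means it never does. */
struct fake_wm { int pumps_left; };

static bool fake_done(void *ctx) { return ((struct fake_wm *)ctx)->pumps_left == 0; }

static void fake_pump(void *ctx) {
    struct fake_wm *wm = ctx;
    if (wm->pumps_left > 0)
        wm->pumps_left--;
}
```

With a non-blocking pump this is a busy loop for up to the full timeout. A real version might sleep briefly between iterations if burning a core for a second matters.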

DMG “Installer” and .DS_Store

DMGs (disk images) are a pretty great way to distribute macOS applications. Users just drag and drop the application bundle; no installer necessary. The DMG has a link to the Applications folder so the app is easy to copy, and it can even have a nice background image. There are plenty of guides online for creating a DMG manually, but I wanted to create one from a script. No problem, I’ll use hdiutil to create a DMG from a folder. But that alone doesn’t work: the background doesn’t show up. Why not? I don’t know what kind of black magic goes on in .DS_Store files, but the background image is apparently not stored as just a relative path to the file. You have to create a writable DMG, mount it, create your template .DS_Store file on the mounted volume, and copy it to your staging area. Trying to create the .DS_Store file directly in your staging area won’t work. The DMG guides tell you to do this, of course, but not why. Unless you know about the .DS_Store magic, you may assume, as I did, that any old .DS_Store file will do. Ultimately I created a template .DS_Store file by hand and put it in source control, so a script can build the DMG using this template.
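Here is a rough sketch of the scripted half of that process, written in C to match the other examples. It assumes the template .DS_Store was already made on a mounted writable image as described above. The helper names (`copy_file`, `prepare_staging`, `hdiutil_create_args`, `run`) and all paths are hypothetical. Copying the .app bundle and the background image into the staging folder is left out. The hdiutil options used (`create`, `-volname`, `-srcfolder`, `-ov`, `-format UDZO`) are standard ones. I'd also assume the volume name should match the one used when the template was made, since the background reference appears to be tied to the volume.

```c
#define _POSIX_C_SOURCE 200809L
#include <errno.h>
#include <spawn.h>
#include <stdio.h>
#include <sys/stat.h>
#include <sys/types.h>
#include <sys/wait.h>
#include <unistd.h>

extern char **environ;

/* Byte-for-byte file copy; returns 0 on success, -1 on failure. */
static int copy_file(const char *src, const char *dst) {
    FILE *in = fopen(src, "rb");
    if (!in) return -1;
    FILE *out = fopen(dst, "wb");
    if (!out) { fclose(in); return -1; }
    char buf[8192];
    size_t n;
    int rc = 0;
    while ((n = fread(buf, 1, sizeof buf, in)) > 0)
        if (fwrite(buf, 1, n, out) != n) { rc = -1; break; }
    if (ferror(in)) rc = -1;
    fclose(in);
    if (fclose(out) != 0) rc = -1;
    return rc;
}

/* Create the staging folder, copy in the version-controlled template
   .DS_Store and add the Applications link users drag the app onto. */
static int prepare_staging(const char *staging, const char *template_ds_store) {
    char path[4096];
    if (mkdir(staging, 0755) != 0 && errno != EEXIST) return -1;
    snprintf(path, sizeof path, "%s/.DS_Store", staging);
    if (copy_file(template_ds_store, path) != 0) return -1;
    snprintf(path, sizeof path, "%s/Applications", staging);
    if (symlink("/Applications", path) != 0 && errno != EEXIST) return -1;
    return 0;
}

/* argv for: hdiutil create -volname NAME -srcfolder DIR -ov -format UDZO OUT
   `args` must hold at least 11 entries; the list is NULL-terminated. */
static void hdiutil_create_args(const char **args, const char *volname,
                                const char *staging, const char *out_dmg) {
    const char *list[] = { "hdiutil", "create", "-volname", volname,
                           "-srcfolder", staging, "-ov", "-format", "UDZO",
                           out_dmg, NULL };
    for (size_t i = 0; i < sizeof list / sizeof list[0]; i++)
        args[i] = list[i];
}

/* Run a command and wait for it; returns its exit status, or -1. */
static int run(const char **args) {
    pid_t pid;
    int status;
    if (posix_spawnp(&pid, args[0], NULL, NULL, (char *const *)args, environ) != 0)
        return -1;
    if (waitpid(pid, &status, 0) < 0) return -1;
    return WIFEXITED(status) ? WEXITSTATUS(status) : -1;
}
```

A driver would call `prepare_staging`, copy the app bundle and background image into the staging folder, fill an 11-entry array with `hdiutil_create_args`, and pass it to `run`.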

Dynamic Library Symbol Visibility in macOS

Symbol visibility for libraries is hidden by default on macOS; more specifically, that’s the clang default in Xcode. This is actually great, since it means you don’t pollute your executable with symbols from your libraries. But it’s not what I was expecting fresh from the Linux port, where symbols are visible by default. Confusingly, setting the visibility attribute to “default” is what makes a symbol visible, so the default visibility on macOS is not “default”. I didn’t catch this right away because the Debug build worked fine, and if Debug worked, what madness would make Release fail? Well, the Debug build has testability enabled by default, which apparently also affects symbol visibility (I assume so test code can access “internal” functions). I flipped this option and my Release build started working. In the future I’ll probably set the visibility attribute in gcc/clang, similar to how I use __declspec(dllexport)/__declspec(dllimport) in MSVC.
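Here is a minimal sketch of that export-macro pattern. It assumes the library is compiled with `-fvisibility=hidden` on gcc/clang, so that only annotated symbols are exported. The names `MYLIB_API`, `MYLIB_BUILD`, `mylib_add`, and `mylib_internal_sum` are hypothetical.

```c
/* Hypothetical: defined when compiling the library itself. A real build
   system would pass -DMYLIB_BUILD; it is defined here so the sketch stands alone. */
#define MYLIB_BUILD 1

#if defined(_WIN32)
#  if defined(MYLIB_BUILD)
#    define MYLIB_API __declspec(dllexport)   /* building the DLL */
#  else
#    define MYLIB_API __declspec(dllimport)   /* linking against the DLL */
#  endif
#elif defined(__GNUC__) || defined(__clang__)
   /* With -fvisibility=hidden, only symbols marked "default" are exported. */
#  define MYLIB_API __attribute__((visibility("default")))
#else
#  define MYLIB_API
#endif

/* Not annotated: under -fvisibility=hidden this stays internal to the
   shared library, though code inside the library can still call it. */
int mylib_internal_sum(int a, int b) { return a + b; }

/* Annotated: exported from the shared library on every platform. */
MYLIB_API int mylib_add(int a, int b) { return mylib_internal_sum(a, b); }
```

Because the annotation is explicit, the exported set no longer depends on whichever visibility default (or testability setting) a given build configuration happens to use. On macOS, `nm -gU` on the built library should then list `_mylib_add` but not `_mylib_internal_sum`.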

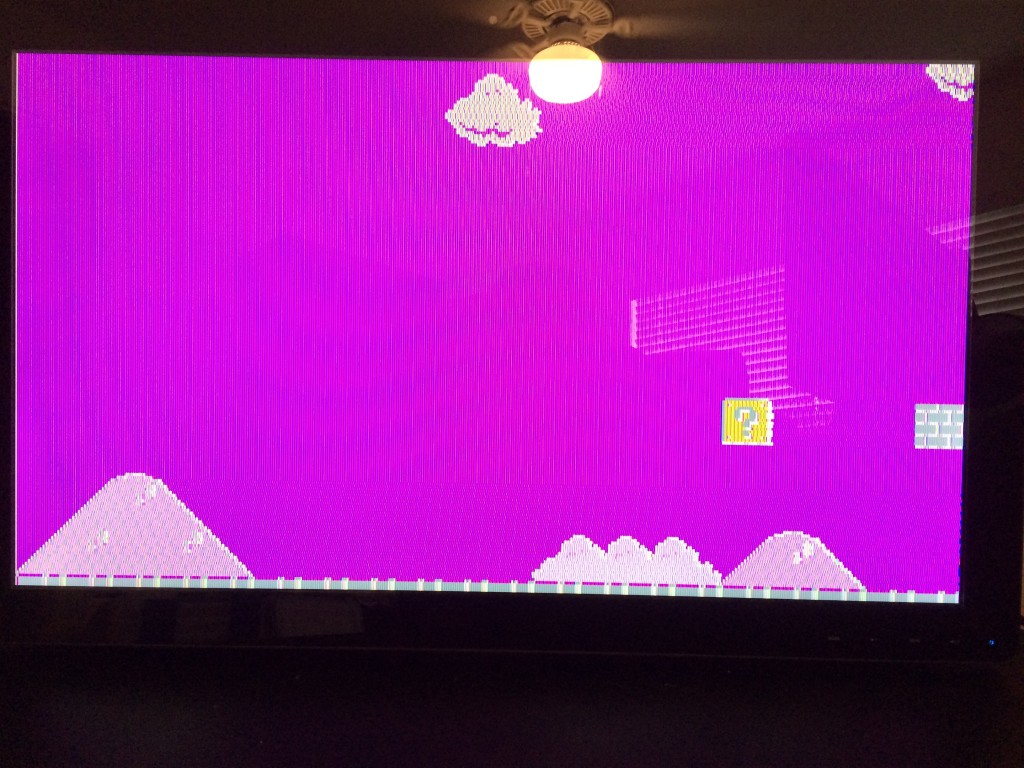

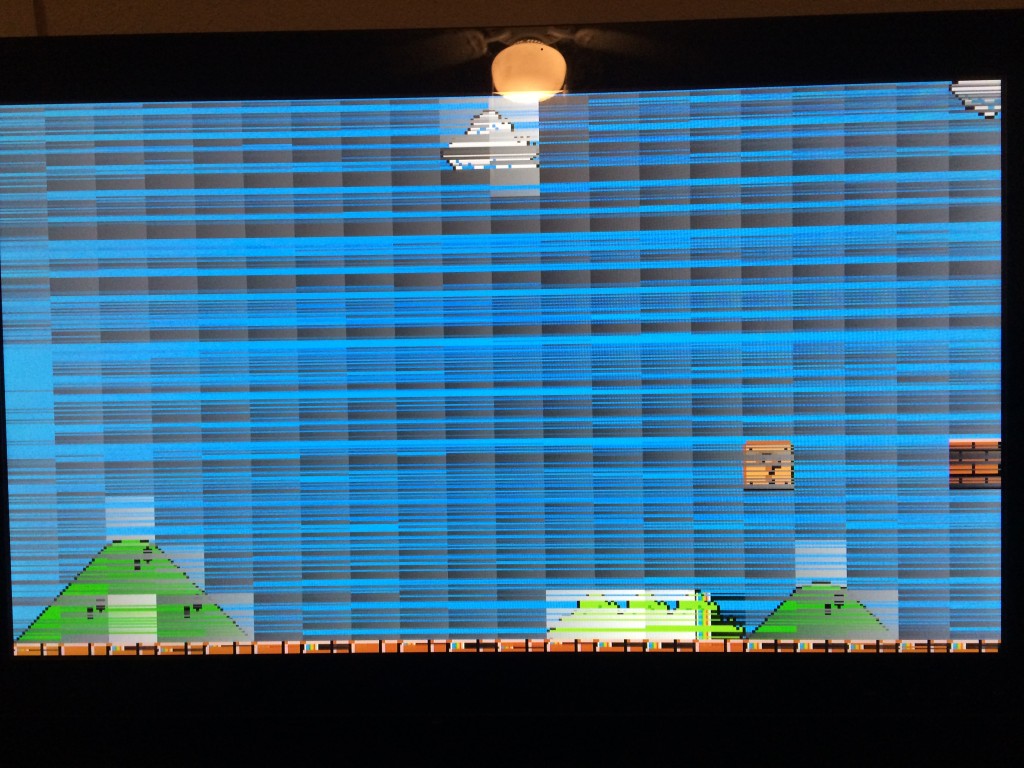

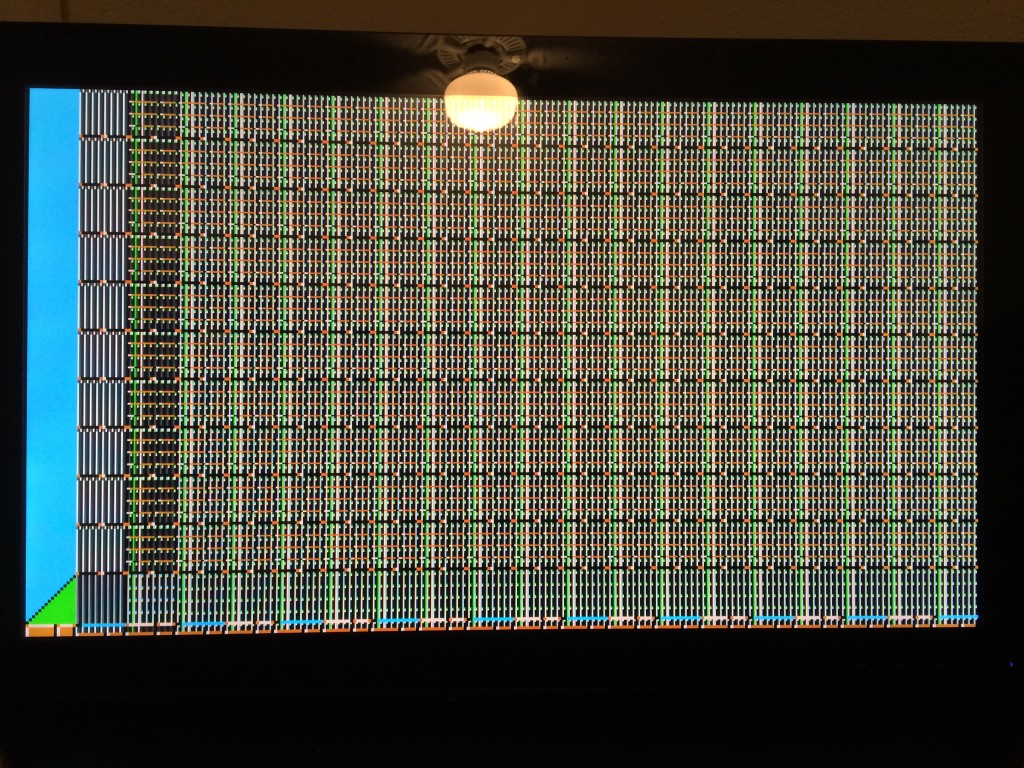

And now a screenshot:

Thrilling, absolutely thrilling.