I’ve decided to restart my website blog type thing to document a project I’ve been working on for over a year now. The next few posts will recap my project(s) since then.

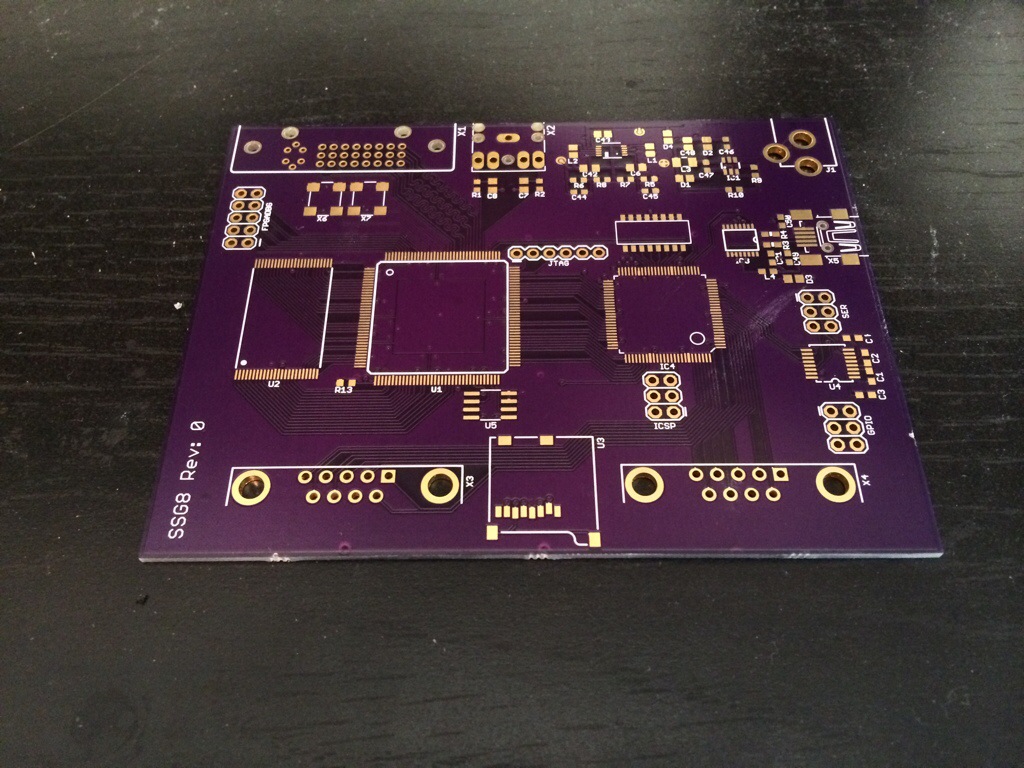

It all started with a friend of mine saying how surprised he was that the TurboGrafx-16 had some decent looking games even though it only had an 8-bit processor. I took this as a slight upon 8-bit processors everywhere, modern 8-bit microcontrollers being quite powerful. So I decided to create a modern 8-bit game console with 16-bit-like capabilities and see what I can do with it. That’s cheating of course, comparing an old 8-bit CPU to a modern one, but I don’t care. Plus I hadn’t done any board design, so this will be new and interesting.

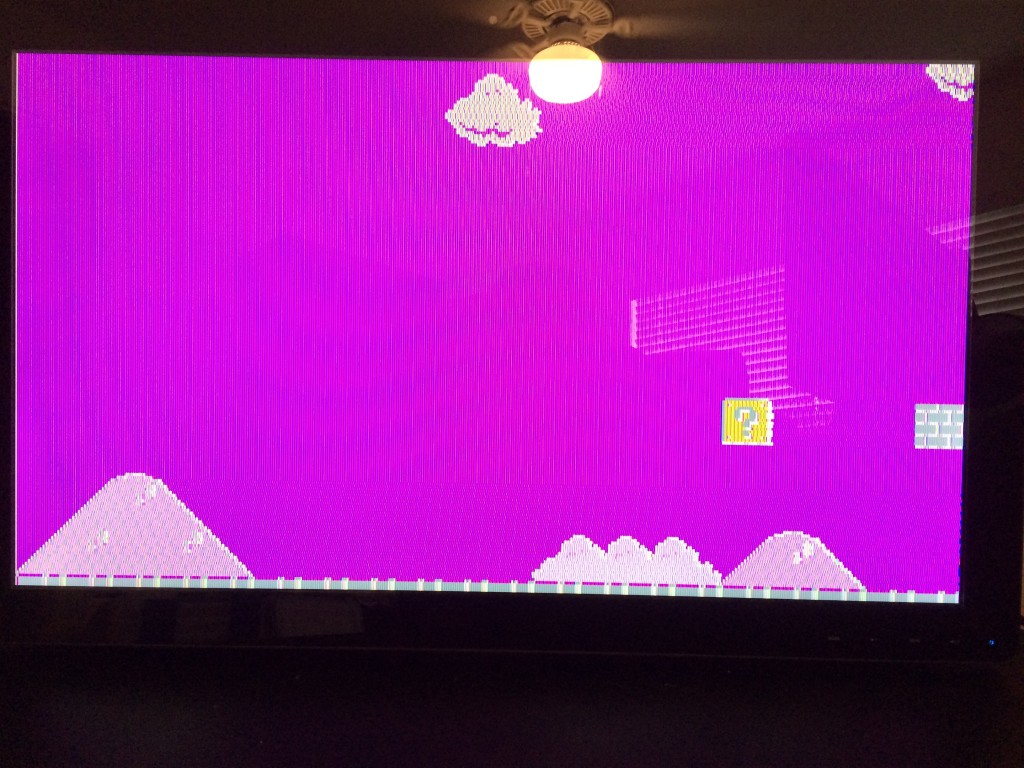

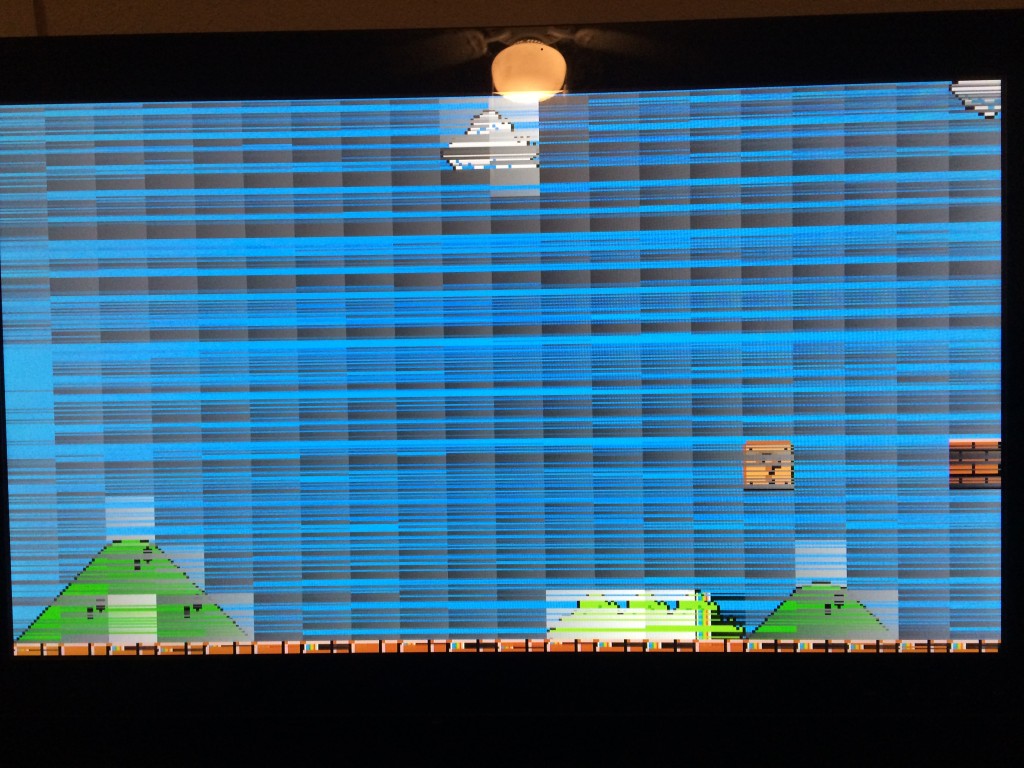

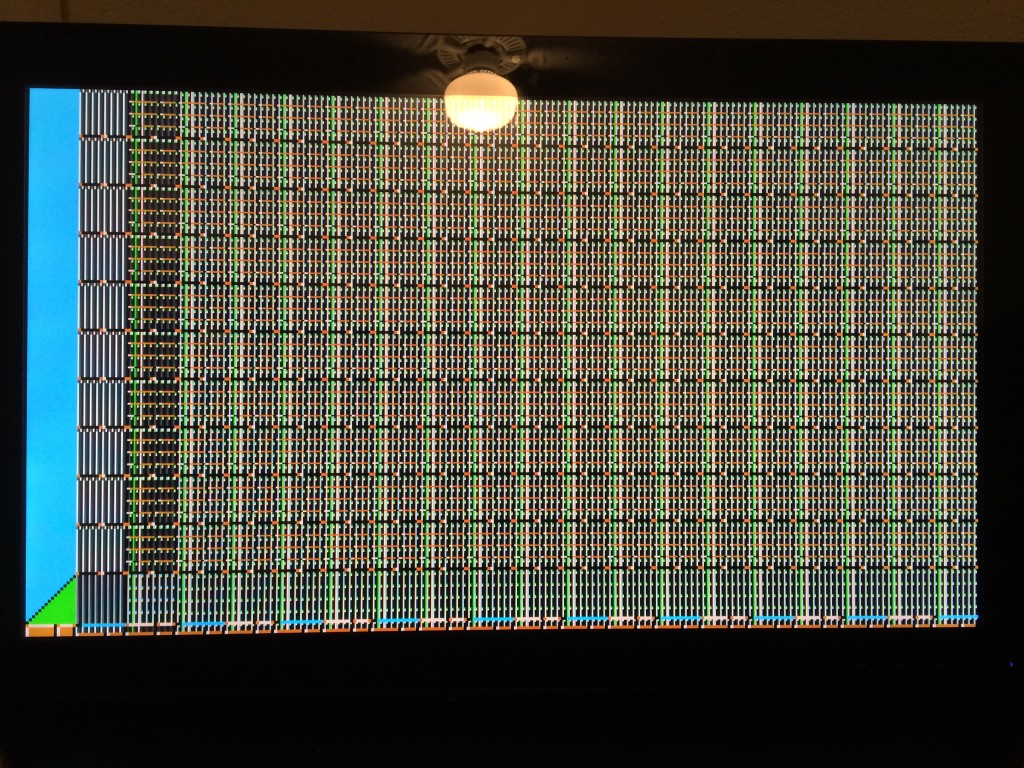

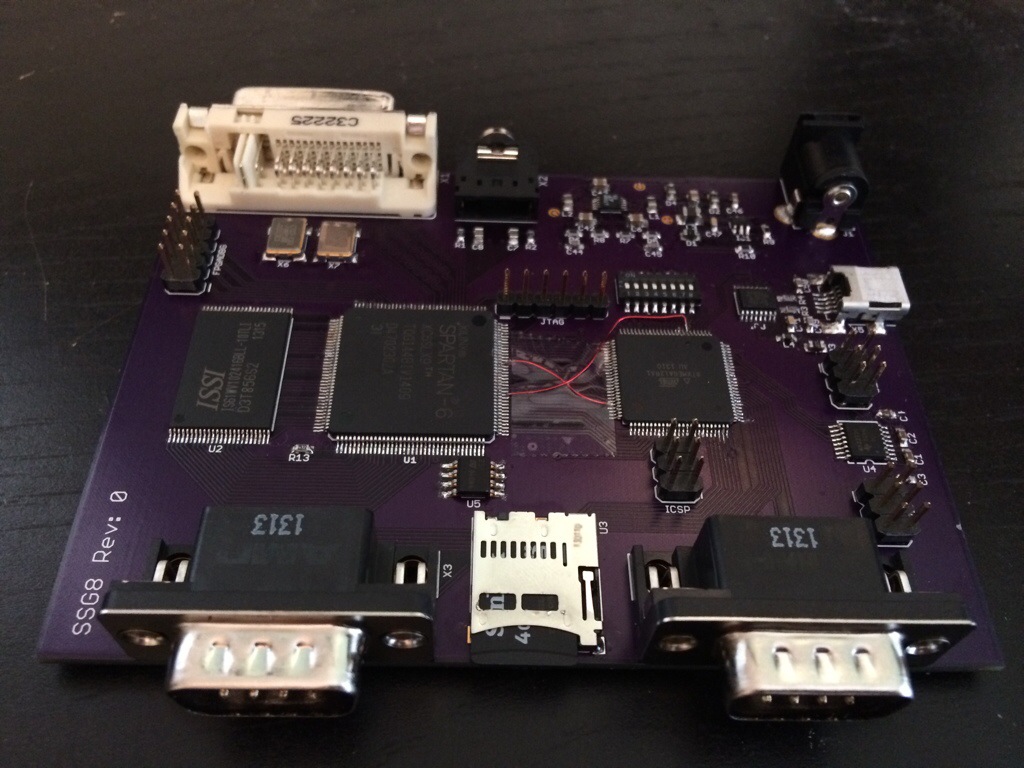

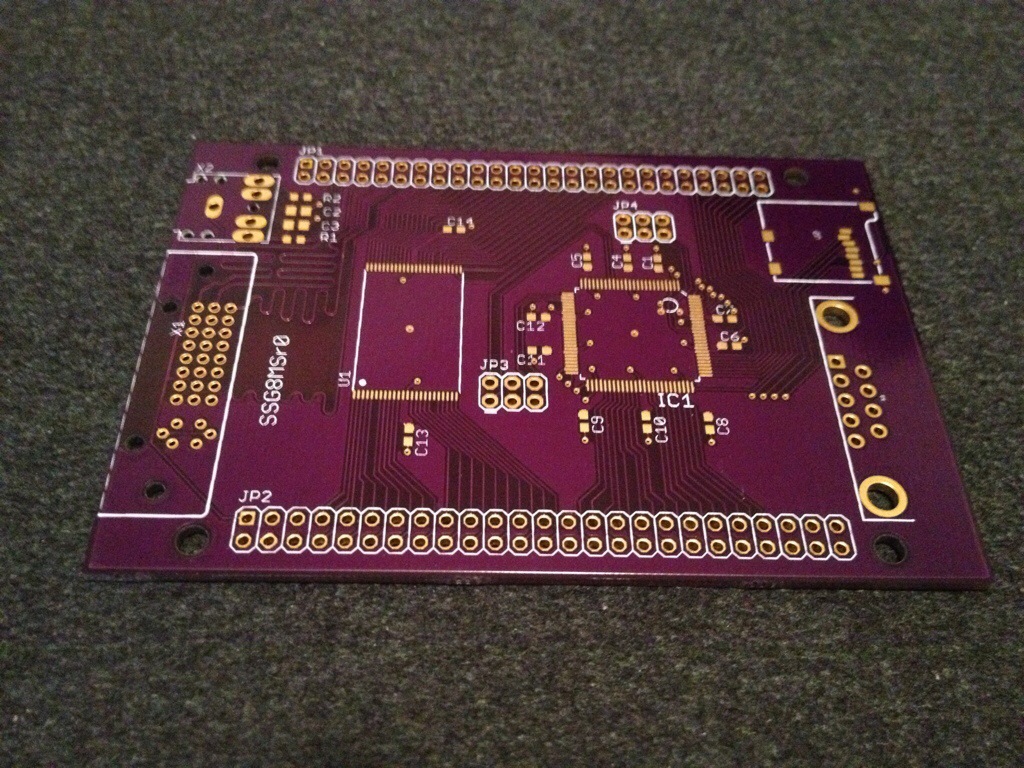

My first thought was to use a microcontroller to generate an NTSC signal. Some googling showed this was possible and had successfully been done before, but in each case the signal generated was, well, crappy. It’s impressive people have been able to get it to work at all, but the signal is either monochrome or low resolution. A microcontroller just isn’t fast enough to generate a full resolution color image without some external help. For instance, a common frequency for providing data to an NTSC encoder is 27MHz, and the Atmel chip I settled on only runs at 32MHz. That doesn’t leave any room for generating meaningful data. I needed something with lots of horsepower and accurate timing. What do you do when you need that? You get an FPGA. Another thing I hadn’t done before, more new and interesting. So I could have a microcontroller controlling an FPGA that feeds data to an external NTSC video encoder.
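To put a number on "no room", here is a quick back-of-the-envelope sketch using the two clock rates above. The variable names are just mine for illustration, and it assumes the CPU would have to hand the encoder one sample on every encoder clock.

```python
# Clock rates taken from the paragraph above.
CPU_HZ = 32_000_000      # Atmel microcontroller clock
ENCODER_HZ = 27_000_000  # common data rate for an NTSC encoder

# How many CPU clock cycles are available per sample sent to the encoder,
# assuming one sample is needed on every encoder clock.
cycles_per_sample = CPU_HZ / ENCODER_HZ

print(f"CPU cycles available per encoder sample: {cycles_per_sample:.3f}")
```

That works out to barely more than one CPU cycle per sample, which isn’t enough to fetch a pixel, let alone compute one.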

I’ll also need to make some noise. I’d already settled on an FPGA at this point, so I might as well have it also act as the SPU. I found some audio DACs using I2S, which is apparently a thing, and also awesome. I also considered using a separate microcontroller with I2S or built-in audio DACs. The ones I found either didn’t have as much memory as I calculated I’d need, or they were 32-bit. And that’s just too many bits, that’s cheating. The specific kind of cheating that I won’t do. Or is it? The 68k in the Genesis looks like a 32-bit processor from software, even though it’s implemented with a 16-bit core. I’ll also need joysticks. I have a handful of NES/SNES/Genesis controllers in my collection I can use. Next up I’ll need a “cartridge.” Micro SD cards are ubiquitous and I’ve seen them interfaced with an Arduino before via SPI. So that will work.

I’ll also need more cowbell RAM. And this is where it gets messy. You need lots of signals to get RAM running. I have to consider what I can reasonably manufacture without it becoming prohibitively expensive for a hobby project: how many layers the board can have, what IC packages I can use. I can’t hand solder BGA, that would have to be professionally manufactured. Fully assembled one-off prototype boards are too expensive for me to stomach. 2-4 layer boards are relatively cheap to get manufactured and TQFP chips are hand solderable. Ideally I’d like to have separate RAMs for the CPU and GPU/SPU with the GPU/SPU mapped into the CPU’s memory space. That poses three problems: routing that many signals on a relatively low density board, having enough pins in the FPGA, and finding a CPU with a flexible memory controller. There are the Atmel chips with expandable data memory, Microchip chips with expandable program memory, and Zilog chips (makers of the venerable Z80) with expandable program and data memory. Ideally I’d like to have expandable program and data memory, so the Zilog chip is the clear winner. But do I have enough pins? No, no, I do not. The most usable pins I can get in a TQFP FPGA is about 100. The FPGA to RAM interface takes about 45 signals, the CPU to FPGA interface takes about 45 as well, the FPGA to audio DAC interface takes about 4, the FPGA to video encoder interface takes about 10, and by the way some of those 100 pins are dual use and really ought to be used just for configuration.
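Adding up the pin counts above makes the problem obvious. This is only a rough tally: every number is one of the "about" figures from the paragraph, and the dictionary keys are just labels I made up for the sketch.

```python
# Approximate signal counts from the paragraph above.
pin_budget = {
    "fpga_to_ram": 45,
    "cpu_to_fpga": 45,
    "fpga_to_audio_dac": 4,
    "fpga_to_video_encoder": 10,
}

# Roughly the most usable pins available on a TQFP FPGA.
USABLE_PINS = 100

pins_needed = sum(pin_budget.values())
shortfall = pins_needed - USABLE_PINS

print(f"Pins needed: {pins_needed}, usable: {USABLE_PINS}, short by: {shortfall}")
```

And that is before setting aside the dual use pins that should be reserved for configuration, so the real shortfall is worse.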

Expandable program memory is interesting, but that makes mapping the FPGA into the CPU’s memory space not feasible because of how program memory is modified. Thanks, modified Harvard architecture. Expandable data memory is what I really want, though it does mean I have to update the MCU’s flash to load a new game from the “cartridge.” The Atmel chip also has an SDRAM controller as well as an SRAM controller that can be used simultaneously, with the SRAM controller having multiplexed signals so it uses fewer pins. This is awesome and perfect and only available on a variant of the chip they don’t sell and requires an SDRAM chip that’s not manufactured anymore. So my dream of having separate RAMs just isn’t happening. The FPGA will have to demultiplex the MCU signals and act as an arbiter between the MCU/GPU/SPU and RAM. On top of this the Atmel chip’s memory controller doesn’t have an external wait signal, so I have to have fixed timing to the RAM, which limits me to an SRAM. The problem with trying to interface to DRAM from an SRAM interface is that DRAM requires refresh cycles. So the CPU needs to know it has to wait, or be told to wait, while this happens. Without a wait signal you have to assume the worst possible case (a DRAM refresh occurs right when the memory access is requested) and insert enough wait states on every access to cover it. The worst case access time is 100 times slower than the typical access time. SRAM is easier to interface to, but much more expensive than DRAM and only available in smaller capacities.
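Here is a small sketch of why the missing wait signal hurts so much with DRAM. Only the 100x ratio comes from the text above. The time unit and the chance of an access colliding with a refresh are made-up assumptions purely for illustration, not measurements.

```python
# Typical access time in arbitrary units (assumption, just a baseline of 1).
TYPICAL_ACCESS = 1.0
# Worst case is 100 times slower than typical (from the text above).
WORST_CASE_RATIO = 100
WORST_ACCESS = TYPICAL_ACCESS * WORST_CASE_RATIO

# Hypothetical chance that an access lands on a DRAM refresh.
REFRESH_COLLISION_PROB = 0.01


def average_access_with_wait(p_collision: float) -> float:
    """With a wait signal, only accesses that collide with a refresh pay the worst case."""
    return (1 - p_collision) * TYPICAL_ACCESS + p_collision * WORST_ACCESS


def access_without_wait() -> float:
    """With fixed timing, every access must be padded to the worst case."""
    return WORST_ACCESS


with_wait = average_access_with_wait(REFRESH_COLLISION_PROB)
without_wait = access_without_wait()

print(f"Average access with a wait signal: {with_wait:.2f}")
print(f"Every access without a wait signal: {without_wait:.2f}")
```

Whatever the real collision rate is, fixed timing means paying the worst case on every single access, which is why SRAM wins here despite the cost.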

So I’ve got a plan that will totally work and not have to change drastically.